Online targeting has far-reaching offline consequences.

Social media can be an empowering tool for people around the world, but government officials and private individuals across the Middle East and North Africa (MENA) region are using these platforms to target lesbian, gay, bisexual, and transgender (LGBT) people. Read the full report.

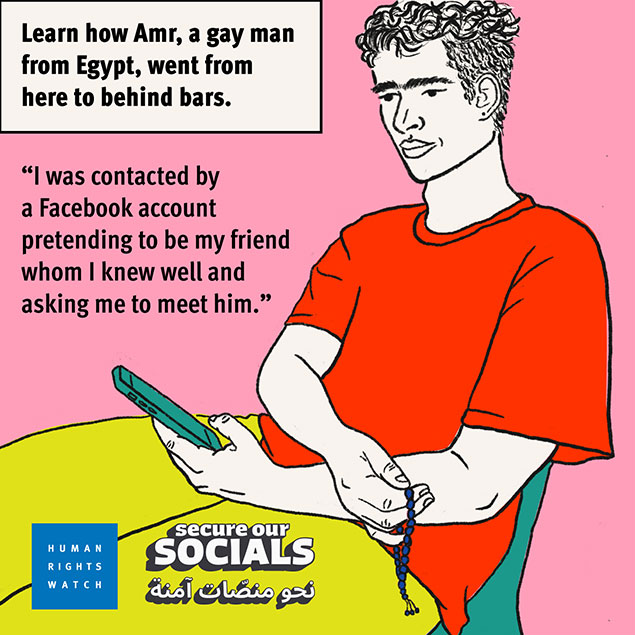

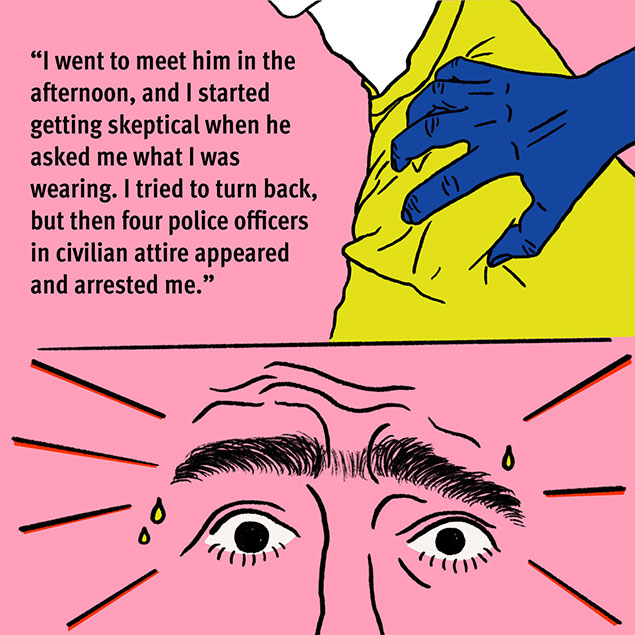

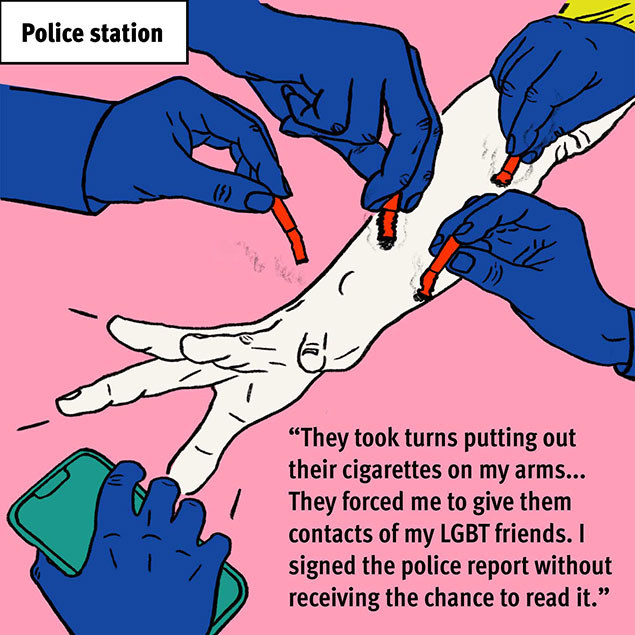

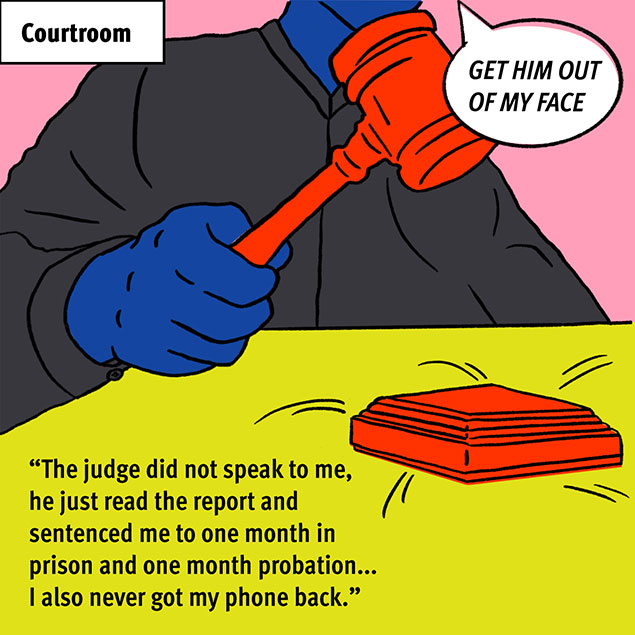

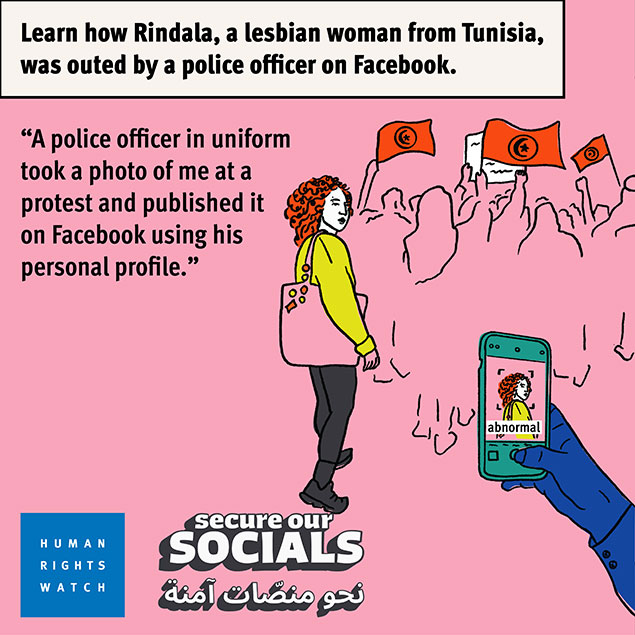

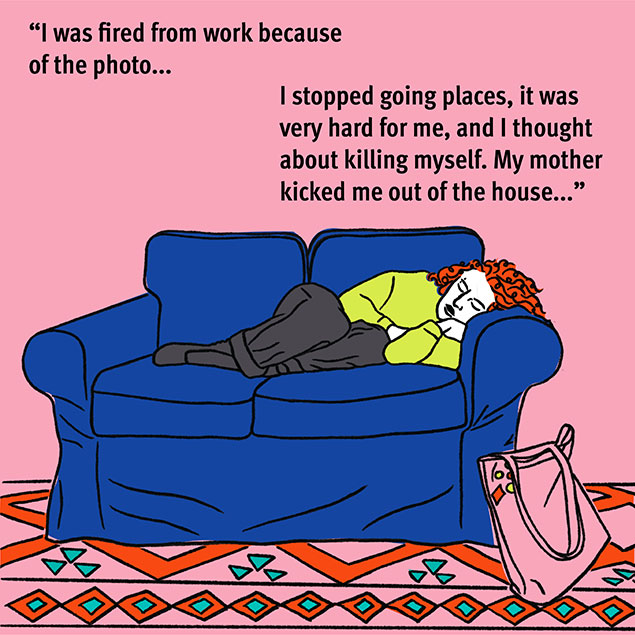

Security forces have entrapped LGBT people using fake profiles and chats, and subjected them to online extortion, harassment, doxxing, and outing in public posts on Facebook and Instagram. In some cases, those targeted are arbitrarily detained, prosecuted, and tortured for same-sex conduct or “immorality” and “debauchery” based on illegitimately obtained photos, chats, and similar information. Download our awareness tips to learn more about how to mitigate the risks of digital targeting.

“I have received threats on various social media platforms from armed groups and high-ranking official army men. They specifically target people like us, to hunt us down and kill us.”

Masa, a 19-year-old transgender woman from Najaf, Iraq.

Human Rights Watch documented the use of digital targeting by security forces and its offline consequences in Egypt, Iraq, Jordan, Lebanon, and Tunisia. The report is based on 120 interviews: 90 with LGBT people affected by digital targeting and 30 with experts such as lawyers and digital rights professionals.

Recognizing that state actors are the primary perpetrators of digital targeting against LGBT people, the report calls on governments in the MENA region to protect LGBT people instead of criminalizing their expression and targeting them online.

Read the true stories.

Amr’s Story

Rindala’s Story

Human Rights Watch’s report documented how Meta’s platforms have been used as a vehicle for targeting LGBT people in the MENA region.

As the largest social media company in the world, Meta is responsible for the security of users on its platforms, including protecting them from egregious offline harm. More rapid, accountable, and transparent content moderation practices can improve Meta’s handling of complaints about content that is likely to lead to real-world harm. Some types of harmful online content, such as posts exposing an individual’s sexual orientation or gender identity, pose a heightened risk of harm and need to be addressed expeditiously. Read our full letter to Meta executives.

The #SecureOurSocials campaign is also calling on Meta to improve its user safety features. Among other asks, Meta should:

Disclose its annual investment in user safety and security, including reasoned justifications explaining how those investments are proportionate to the risk of harm.

Disclose data about the number, diversity, regional expertise, political independence, training qualifications, and relevant language proficiency of staff or contractors tasked with moderating content originating from the MENA region.

Implement a one-step account lockdown tool that allows users to wipe all Meta content from a given device.
